The Tello drone is a fun new toy that lets you go beyond the remote controls and hack it using an API.

My earlier experiences with remote controlled drones haven’t been that great. To gain expertise, they required more hand-eye coordination and control than I was willing to devote to the hobby. So for me, Tello was a game changer. The price of the Tello drone with 2 additional batteries was a bit cheaper than sushi for two with a couple bottles of cold sake.

The Tello is a stable drone that is responsive to your controls. You can launch it from the ground or toss it gently up into the air, where it hovers waiting for your next command. Tello uses its camera and internal Visual Positioning System (VPS) to provide aerial stability and smooth landings. Tello embeds the Intel Movidius Myriad 2 Vision Processing Unit (VPU). The VPU handles object recognition, allowing the drone to respond to objects and hand gestures while in flight.

Tello has several built-in stunts, such as flips in 8 different directions, easy photo modes, landing in the palm of your hand, bounce mode and throw-and-go. The stunts are easy to run and Tello performs them quite well.

When Tello powers up it creates a Wi-Fi network, which you connect to with your phone or computer. Tello is controlled by sending it messages over UDP. UDP is a faster protocol than TCP, at the expense of possibly losing some packets. When the command and control information is sent continuously, a lost packet is likely to be superseded by a newer one, which makes up for the loss.

When you’re ready to move beyond the standard built-in controls, you can hack Tello using the SDK provided by Ryze Robotics. To get started I watched a couple YouTube videos posted by Heliguy and HalfChrome. The videos will help you get started with the MIT Scratch 2 programming lab, which uses a JavaScript module and NodeJS to interface with the Tello. Scratch is a great environment for kids learning to program and control Tello.

The SDK also includes a snippet of Python code which you can use to interact with Tello faster, with less heavy lifting. The Python code is the core interface from which larger applications can be written. The raw UDP socket interface example in Python can easily translate into other languages such as Go, Java, JavaScript and C/C++.

The SDK also gives some hints about creating a UDP server that listens for the live video stream from Tello. I show the Python snippet below.

#

# Tello Python3 Control Demo

#

# http://www.ryzerobotics.com/

#

# 1/1/2018

import threading

import socket

import sys

import time

import platform

host = ''

port = 9000

locaddr = (host,port)

# Create a UDP socket

sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

tello_address = ('192.168.10.1', 8889)

sock.bind(locaddr)

def recv():

count = 0

while True:

try:

data, server = sock.recvfrom(1518)

print(data.decode(encoding="utf-8"))

except Exception:

print ('\nExit . . .\n')

break

print ('\r\n\r\nTello Python3 Demo.\r\n')

print ('Tello: command takeoff land flip forward back left right \r\n up down cw ccw speed speed?\r\n')

print ('end -- quit demo.\r\n')

#recvThread create

recvThread = threading.Thread(target=recv)

recvThread.start()

while True:

try:

python_version = str(platform.python_version())

version_init_num = int(python_version.partition('.')[0])

# print (version_init_num)

if version_init_num == 3:

msg = input("");

elif version_init_num == 2:

msg = raw_input("");

if not msg:

break

if 'end' in msg:

print ('...')

sock.close()

break

# Send data

msg = msg.encode(encoding="utf-8")

sent = sock.sendto(msg, tello_address)

except KeyboardInterrupt:

print ('\n . . .\n')

sock.close()

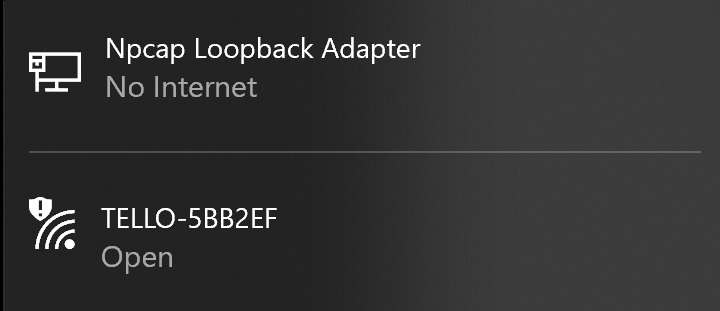

breakTo run the code I connected my Windows 10 laptop to the Tello network.

After connecting to the network I ran the Python snippet, launched the Tello and safely landed.
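The same launch-and-land session can also be scripted instead of typed. What follows is only a minimal sketch, not part of the SDK: the helper names `send_command` and `run_commands` are my own, and it assumes Tello answers each command with a short text reply such as `ok`. The address and port are the ones used in the SDK snippet above.

```python
import socket

# Address and port taken from the SDK snippet above.
TELLO_ADDRESS = ("192.168.10.1", 8889)


def send_command(sock, command, address=TELLO_ADDRESS, timeout=10.0):
    """Send one text command; return the decoded reply, or None on timeout.

    Hypothetical helper: UDP gives no delivery guarantee, so a missing
    reply is reported as None instead of blocking forever.
    """
    sock.settimeout(timeout)
    sock.sendto(command.encode("utf-8"), address)
    try:
        data, _ = sock.recvfrom(1518)
    except socket.timeout:
        return None
    return data.decode("utf-8", errors="replace").strip()


def run_commands(sock, commands, address=TELLO_ADDRESS, timeout=10.0):
    """Send each command in order and return a list of (command, reply) pairs."""
    return [(c, send_command(sock, c, address, timeout)) for c in commands]


# Example (needs a real Tello and a laptop joined to its Wi-Fi network):
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# sock.bind(("", 9000))
# print(run_commands(sock, ["command", "takeoff", "land"]))
# sock.close()
```

Waiting for each reply before sending the next command keeps the script from racing ahead of the drone, at the cost of pausing for the full timeout whenever a packet is lost.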

You’ll find the commands in the Tello SDK Documentation. You can experiment with capturing live video by sending Tello the streamon command. When you’re done capturing, send streamoff.
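If you would rather stay in Python for the capture, here is a rough sketch of a listener that dumps incoming video datagrams to a file. It is not from the SDK: the function name `capture_datagrams` and the file name `tello.h264` are made up for illustration, and it assumes the datagram payloads, written back to back, form a raw H.264 stream that a player or ffmpeg can read. I have not verified that assumption on every platform.

```python
import socket
import time

VIDEO_PORT = 11111  # same port as in the ffmpeg command below


def capture_datagrams(sock, out, seconds=10.0, idle_timeout=2.0):
    """Append each received datagram payload to `out`; return bytes written.

    Stops after `seconds`, or earlier if nothing arrives for `idle_timeout`.
    `out` is any binary file-like object with a write() method.
    """
    deadline = time.monotonic() + seconds
    sock.settimeout(idle_timeout)
    total = 0
    while time.monotonic() < deadline:
        try:
            data, _ = sock.recvfrom(2048)
        except socket.timeout:
            break  # stream stalled or never started
        out.write(data)
        total += len(data)
    return total


# Example (needs a real Tello; address and ports as in the SDK snippet):
# control = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# control.bind(("", 9000))
# video = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# video.bind(("", VIDEO_PORT))
# control.sendto(b"command", ("192.168.10.1", 8889))
# control.sendto(b"streamon", ("192.168.10.1", 8889))
# with open("tello.h264", "wb") as f:  # hypothetical output file
#     print(capture_datagrams(video, f), "bytes captured")
# control.sendto(b"streamoff", ("192.168.10.1", 8889))
```

The listener deliberately does no decoding. It only proves that packets are arriving and saves them for later inspection.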

It took me a while to get this working. After a lot of failed experiments, I discovered my Windows 10 laptop was misdirecting the stream, even though Wireshark showed it arriving on UDP port 11111. I was able to run ffmpeg without issues on Windows 7, capture the stream to an mp4 file and play it using VLC. This experiment is probably better done using Linux.

    c:\> ffmpeg -i udp://0.0.0.0:11111 -vcodec libx264 output.mp4

In the next section I review the other ports I found open on Tello and look at the packets exchanged using Wireshark.

Here are the results of an nmap scan of the Tello, showing an open TCP port 9999, which nmap labels abyss and which might be an Abyss web server:

    C:> nmap -Pn 192.168.10.1

    Starting Nmap 7.80 ( https://nmap.org ) at 2019-12-29 10:08 Eastern Standard Time
    Nmap scan report for 192.168.10.1
    Host is up (0.025s latency).
    Not shown: 999 closed ports
    PORT     STATE SERVICE
    9999/tcp open  abyss
    MAC Address: 60:60:1F:**:**:** (SZ DJI Technology)

    Nmap done: 1 IP address (1 host up) scanned in 25.66 seconds

I was able to connect from both my phone and laptop at the same time. In the process of doing so I launched Tello but wasn’t able to land (until the battery ran down). It seems I may have caused a problem sending commands from two locations?
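The open TCP port from the nmap scan above can also be re-checked from Python when nmap isn’t handy. This is just a quick sketch with a helper name of my own, `tcp_port_open`. It only tells you whether a TCP connection succeeds and says nothing about which service is listening.

```python
import socket


def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Example (needs a laptop joined to the Tello Wi-Fi network):
# print(tcp_port_open("192.168.10.1", 9999))
```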

Note the security advisory below:

If you’re playing in an area where vast amounts of beer are consumed, or around tech-savvy buddies who enjoy messing with you, you might want to consider changing the Wi-Fi SSID and adding a password to keep them out. See the app’s config section.

I hope this post gave you a good feel for the Tello and its capabilities. If I delve any deeper, it will probably be to code a UDP stream reader client using Go and record the live UDP video stream on my laptop.